Hello all. Today we will discuss three methods to upload files to Google Cloud Storage from your NextJS app. Each method has its own use case, so read until the end.

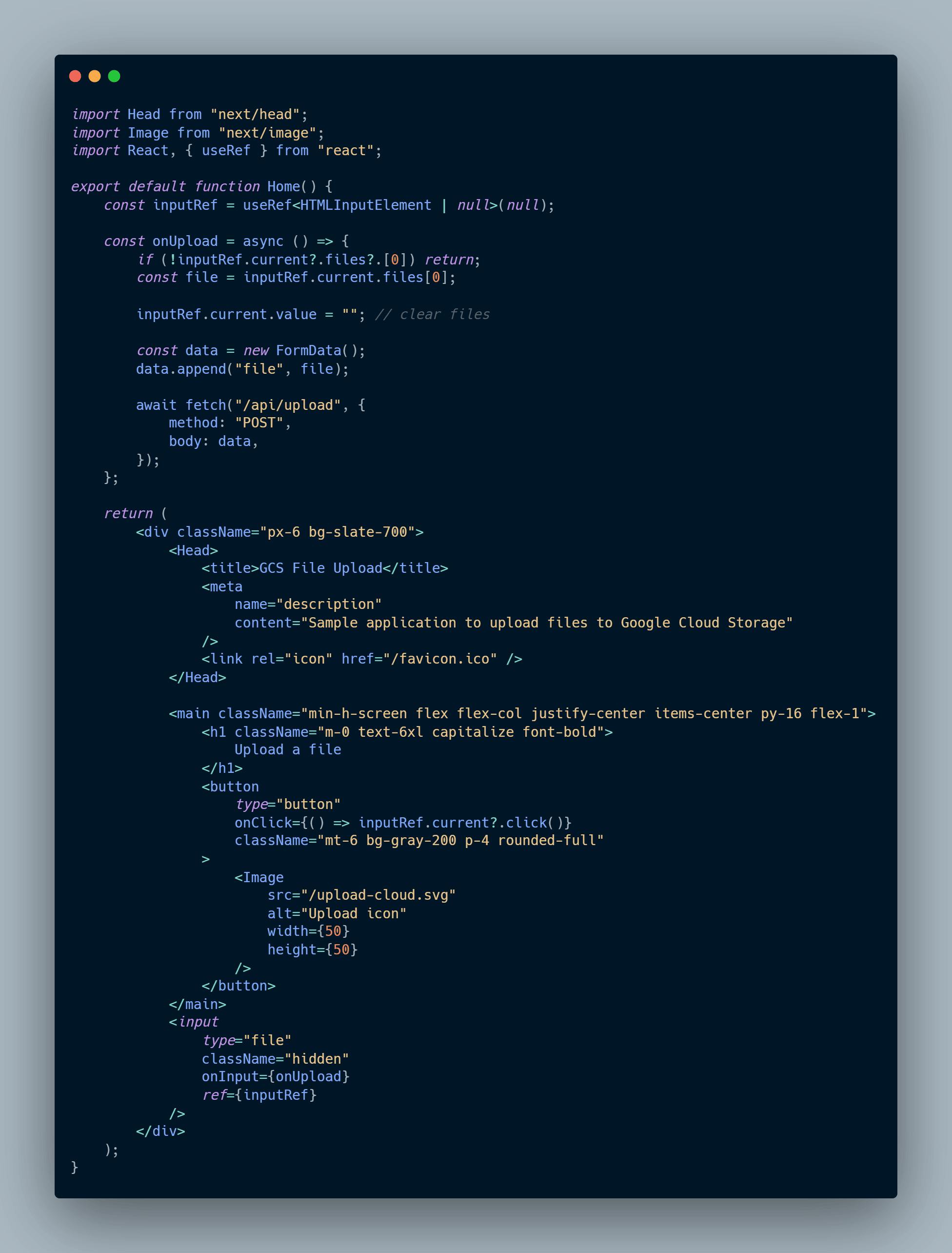

To get started, I have created a basic page where the user can upload a file to an /api/upload endpoint.

When the user clicks the button, we trigger a hidden file input. When a file is selected, we send a POST request containing the file data.

Note that the icon comes from Feather.
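The page code itself lives in the screenshot, but the request it sends can be sketched in a few lines. This is a minimal sketch, not the page's actual code: `uploadFile` and `FetchLike` are hypothetical names, and it assumes the form field is called `file`, which matches the `files.file` lookup in method1.

```typescript
// Minimal shape of fetch that we rely on; tests can pass a fake instead.
type FetchLike = (
  url: string,
  init: { method: string; body: FormData }
) => Promise<{ ok: boolean; status: number }>;

// Hypothetical helper: POST one file to /api/upload as multipart/form-data.
export async function uploadFile(
  file: Blob,
  filename: string,
  fetchImpl: FetchLike = fetch
): Promise<void> {
  const body = new FormData();
  // Assumed field name "file"; it must match files.file on the server.
  body.append("file", file, filename);

  // No Content-Type header: fetch derives it (boundary included) from FormData.
  const res = await fetchImpl("/api/upload", { method: "POST", body });
  if (!res.ok) {
    throw new Error(`Upload failed with status ${res.status}`);
  }
}
```

On the page, you would call it from the hidden input's change handler, for example with `input.files[0]` and its `name`. Letting the browser build the multipart body from `FormData` avoids hand-writing the boundary.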

First of all, make sure you have access to a Google Cloud Storage bucket. You will also need a service account key file; if you don't have one, follow the steps listed here to create one.

Initialising GCS

```bash
npm i @google-cloud/storage
```

Create a new folder named lib and a file called gcs.ts within it. This file exports a function that opens a write stream for a given filename in the bucket, whose name is read from the GCS_BUCKET environment variable.

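```typescript
import { Storage } from "@google-cloud/storage";

// Authenticate with the service account key file
const storage = new Storage({
  keyFilename: "KEY_FILENAME.json",
});

// The bucket name comes from an environment variable
const bucket = storage.bucket(process.env.GCS_BUCKET as string);

// Open a writable stream to a new object in the bucket
export const createWriteStream = (filename: string, contentType?: string) => {
  const ref = bucket.file(filename);

  const stream = ref.createWriteStream({
    gzip: true, // compress the upload and store it with Content-Encoding: gzip
    contentType,
  });

  return stream;
};
```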
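Because the rest of the code only needs `createWriteStream` to return a Node.js `Writable`, you can swap in an in-memory stand-in while developing without a bucket or key file. This is a hypothetical helper, not part of @google-cloud/storage: it skips gzip and simply keeps each finished object in a `memoryBucket` map.

```typescript
import { Writable } from "stream";

// Hypothetical in-memory "bucket": filename -> stored object.
export const memoryBucket = new Map<string, { contentType?: string; data: Buffer }>();

// Same signature as createWriteStream in lib/gcs.ts, but nothing leaves the process.
export const createWriteStream = (filename: string, contentType?: string): Writable => {
  const chunks: Buffer[] = [];
  return new Writable({
    write(chunk, _encoding, callback) {
      chunks.push(Buffer.from(chunk));
      callback();
    },
    // Runs once the writer calls end(); store the object, then signal "finish".
    final(callback) {
      memoryBucket.set(filename, { contentType, data: Buffer.concat(chunks) });
      callback();
    },
  });
};
```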

1. API Route

We use node-formidable to handle file uploads in a NextJS API route. However, by default its file parsing won't work in serverless environments, so we also rely on formidable-serverless. With it, handling file uploads should work when deploying to Vercel, Netlify, etc.

```bash
npm i formidable
npm i -D @types/formidable
```

Here is the code to "promisify" form parsing, saved as lib/parseForm.ts:

```typescript
import formidable from "./formidable-serverless";
import IncomingForm from "formidable/Formidable";
import { IncomingMessage } from "http";

// Wrap formidable's callback-based parse() in a Promise
const parseForm = (
  form: IncomingForm,
  req: IncomingMessage
): Promise<{ fields: formidable.Fields; files: formidable.Files }> =>
  new Promise((resolve, reject) => {
    form.parse(req, (err, fields, files) => {
      if (err) return reject(err);
      resolve({ fields, files });
    });
  });

export default parseForm;
```
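Why not use Node's `util.promisify` here? It resolves with only the first value passed to the callback after `err`, so `files` would be silently dropped. The sketch below shows this with a hypothetical `fakeParse` that, like `form.parse`, reports two results through one callback.

```typescript
import { promisify } from "util";

// Hypothetical stand-in for form.parse: it reports two results
// (fields and files) through an (err, fields, files) callback.
function fakeParse(
  input: string,
  cb: (err: Error | null, fields: string, files: string[]) => void
): void {
  setImmediate(() => cb(null, `fields:${input}`, [`${input}.bin`]));
}

// util.promisify resolves with only the first value after err,
// so the files argument is lost.
export const promisified = promisify(fakeParse);

// A hand-written wrapper keeps both values, as parseForm does.
export const parseBoth = (input: string) =>
  new Promise<{ fields: string; files: string[] }>((resolve, reject) => {
    fakeParse(input, (err, fields, files) => {
      if (err) return reject(err);
      resolve({ fields, files });
    });
  });
```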

Also note that we copy the source of formidable-serverless into our lib folder instead of installing it, because the version currently published on NPM doesn't use the latest version of formidable.

Now, define a handler in the API route file (pages/api/upload.ts) that only accepts POST requests and calls method1.

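```typescript
import type { NextApiRequest, NextApiResponse } from "next";
import { method1 } from "../../lib/upload";

export default async function handler(
  req: NextApiRequest,
  res: NextApiResponse
) {
  // Only accept POST; 405 plus an Allow header tells the client what is allowed
  if (req.method !== "POST") {
    res.setHeader("Allow", "POST");
    res.status(405).send(`Invalid method: ${req.method}`);
    return;
  }

  // Wait for the upload so failures surface within this request
  await method1(req, res);
}

// Let formidable read the raw request body instead of the built-in parser
export const config = {
  api: {
    bodyParser: false,
  },
};
```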

We disable the NextJS body parser so that formidable can read the raw request body stream itself.

Now we can create an upload.ts file in lib, which handles the upload.

formidable saves each uploaded file to a temporary file on the server, so we can open it with a good ol' fs.createReadStream and pipe it into a GCS write stream.

```typescript
import formidable from "./formidable-serverless";
import { createReadStream } from "fs";
import { pipeline } from "stream/promises";
import { IncomingMessage } from "http";
import { NextApiRequest, NextApiResponse } from "next";
import { Response } from "express";
import parseForm from "./parseForm";
import * as gcs from "./gcs";

export const method1 = async (
  req: NextApiRequest | IncomingMessage,
  res: NextApiResponse | Response
) => {
  const form = formidable();

  try {
    // formidable stores the upload in a temporary file first
    const { files } = await parseForm(form, req);
    // "file" is the form field name sent by the client (formidable v2 File)
    const file = files.file as formidable.File;

    // Stream the temp file to GCS; pipeline() rejects if either stream fails
    await pipeline(
      createReadStream(file.filepath),
      gcs.createWriteStream(
        file.originalFilename ?? file.newFilename,
        file.mimetype ?? undefined
      )
    );

    res.status(200).json("File upload complete");
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    console.error(message);
    res.status(500).json("File upload error: " + message);
  }
};
```

This method is most suitable if you wish to run any preprocessing on the file before sending it to GCS. For example, you may want to extract metadata such as duration, bitrate and title from an audio file.
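Extracting audio metadata needs a media library, so here is a simpler, hypothetical preprocessing step that uses only Node built-ins: computing the size and SHA-256 hash of the temporary file before it is uploaded. `inspectStream` and `inspectFile` are illustrative names, and `inspectFile` assumes formidable v2's `file.filepath` property.

```typescript
import { createHash } from "crypto";
import { createReadStream } from "fs";

// Hash and measure a stream of chunks without holding the whole file in memory.
export async function inspectStream(
  chunks: AsyncIterable<Buffer>
): Promise<{ bytes: number; sha256: string }> {
  const hash = createHash("sha256");
  let bytes = 0;
  for await (const chunk of chunks) {
    hash.update(chunk);
    bytes += chunk.length;
  }
  return { bytes, sha256: hash.digest("hex") };
}

// Convenience wrapper for formidable's temporary file (file.filepath in v2).
export const inspectFile = (filepath: string) =>
  inspectStream(createReadStream(filepath));
```

In method1 you could `await inspectFile(file.filepath)` right after parsing, for example to reject duplicate uploads or to log a checksum, before streaming the file to GCS.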

But if you would rather not store the file on the host machine at all, you can stream it to GCS directly.

2. API Routes with Direct Streams

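Now let's define method2. It uses formidable's fileWriteStreamHandler option, which lets us supply our own writable stream for each incoming file, so the data goes straight to GCS instead of to a temporary file.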

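```typescript
export const method2 = (
  req: NextApiRequest | IncomingMessage,
  res: NextApiResponse | Response
) => {
  // @ts-ignore: fileWriteStreamHandler may be missing from the typings
  const form = formidable({ fileWriteStreamHandler: uploadStream });

  form.parse(req, (err) => {
    // Report parsing failures instead of always answering 200
    if (err) {
      console.error(err.message);
      res.status(500).json("File upload error: " + err.message);
      return;
    }
    res.status(200).json("File upload complete");
  });
};
```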

Our uploadStream function creates a new PassThrough stream for each file. We need it because formidable requires a stream it can write to, while we need a readable stream to pipe into GCS; a PassThrough is both.

```typescript
// ...
import { PassThrough } from "stream";

// Called by formidable once per incoming file
const uploadStream = (file: formidable.File) => {
  // formidable writes into `pass`; everything written is piped on to GCS
  const pass = new PassThrough();

  const stream = gcs.createWriteStream(
    file.originalFilename ?? file.newFilename,
    file.mimetype ?? undefined
  );

  pass.pipe(stream);

  return pass;
};
// ...
```
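One caveat: form.parse can call back as soon as formidable has finished writing into the PassThrough streams, which may be before GCS has received all the data. Below is a minimal sketch of one way to wait for the uploads. `createTrackedHandler` is a hypothetical helper that wraps any writable destination and records one completion promise per file.

```typescript
import { PassThrough, Writable } from "stream";
import { finished } from "stream/promises";

// Hypothetical helper: builds a fileWriteStreamHandler-style function and
// remembers a promise per file that settles when its destination finishes.
export function createTrackedHandler(
  openDestination: (filename: string) => Writable
) {
  const uploads: Promise<void>[] = [];

  const handler = (filename: string): PassThrough => {
    const pass = new PassThrough();
    const destination = openDestination(filename);
    pass.pipe(destination);

    // finished() rejects if the destination errors before finishing.
    const done = finished(destination);
    // Avoid an unhandled rejection before allDone() is awaited; the
    // rejection is still seen by Promise.all below.
    done.catch(() => undefined);
    uploads.push(done);
    return pass;
  };

  // Resolves once every destination has flushed, e.g. before sending 200.
  const allDone = () => Promise.all(uploads).then(() => undefined);

  return { handler, allDone };
}
```

Inside method2 you would create it per request, pass `(file) => handler(file.originalFilename ?? file.newFilename)` as fileWriteStreamHandler with `gcs.createWriteStream` as the destination, and send the success response only after `await allDone()` resolves.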

3. Custom Express server

If API routes are too limiting for your application, NextJS lets you create a custom server. The main downside is that you can no longer deploy to Vercel.

To get started, we will use express to set up our backend server and define the upload route.

```bash
npm i express dotenv
npm i -D nodemon @types/express ts-node
```

Make sure TypeScript is configured to compile the server file, so that it can still import our file upload methods. You can find a working example in the NextJS repository. In short, we use an extra tsconfig file, watch for file changes with nodemon, and execute TypeScript with ts-node.

Let's finish this article by adding our server code with the upload endpoint.

```typescript
import "dotenv/config"; // load .env before any module reads process.env
import { createServer } from "http";
import next from "next";
import express from "express";
import { method2 } from "./lib/upload";

const port = parseInt(process.env.PORT || "3000", 10);
const dev = process.env.NODE_ENV !== "production";
const nextApp = next({ dev });
const handle = nextApp.getRequestHandler();

const app = express();
const server = createServer(app);

app.use(express.json());

(async () => {
  await nextApp.prepare();

  // Our upload endpoint is registered before the Next.js catch-all
  app.post("/api/upload", (req, res) => {
    method2(req, res);
  });

  // Hand every other request to Next.js (app.use also works on Express 5,
  // which rejects a bare "*" route path)
  app.use((req, res) => {
    return handle(req, res);
  });

  server.listen(port, () => {
    console.log(`> Ready on http://localhost:${port}`);
  });
})().catch((err) => {
  console.error(err);
  process.exit(1);
});
```

Wrapping up

Today we learned three different ways to upload a user's files to Google Cloud Storage, each with its own tradeoffs.

And that's all! If you liked this article, consider following me for more.

You can find all the code for this article here on GitHub.