
Introduction

Hello everyone.

In this article, I will show you how to create an all-in-one blog manager system. We will use Python, a high-level dynamically typed language, and GitHub Actions, a continuous integration system developed by GitHub.

Setting the Stage

Imagine having to publish to multiple platforms. Sure, posting to the first is simple.

But from experience, I can say that the "Import post" features on these platforms aren't perfect.

If you persist anyway, you will spend lots of time reformatting blocks of text, uploading code blocks to some other service, and maybe even re-uploading every single image.

Now do you see the problem?

So what better way to optimise your publishing than to create a GitHub-hosted CMS? Even better when automated by a Python-powered GitHub Actions script!

Understanding GitHub Actions

The essence of GitHub Actions is best explained in the official docs. There you will become familiar with workflows, actions, and runners, all of which are essential for our system to function.

System Overview

A user’s blog will be a GitHub repository with a workflow that uses our blog publishing action. The repo will contain a folder for each of the user’s posts, and each post folder will hold the article itself in article.md, along with any images.

We will use the ImgBB API to upload our images, then use the uploaded URLs on both platforms, Hashnode and Medium.

A post can also include a cover image (cover.png), which the action automatically applies on each platform.

But where does Python fit into all of this? Well, Python isn’t natively supported for writing actions, so we use a composite action that runs a Python script.

This also means there are no official toolkit packages like the ones JavaScript actions use (@actions/core, @actions/github). Our Python code must therefore talk to the GitHub API directly, which we'll do through the PyGithub library.
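To make "talking to the GitHub API directly" concrete, here is roughly the raw REST request that PyGithub's repo.get_commit(sha=...) wraps. It is a minimal sketch using only the standard library: build_commit_request is a hypothetical helper, and the example values stand in for the GITHUB_REPOSITORY ("owner/repo") and GITHUB_SHA variables that GitHub Actions sets for each run. Nothing is sent until the request is passed to urllib.request.urlopen.

```python
import urllib.request

GITHUB_API = "https://api.github.com"


def build_commit_request(repository, sha, token):
    """Build (but don't send) a "get a commit" request for the GitHub REST API.

    Hypothetical helper: `repository` is "owner/repo" and `sha` is a commit SHA,
    e.g. the GITHUB_REPOSITORY and GITHUB_SHA environment variables in a run.
    """
    return urllib.request.Request(
        f"{GITHUB_API}/repos/{repository}/commits/{sha}",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
    )


# Example values only; a workflow run would read these from os.environ
req = build_commit_request("octocat/blog", "abc123", token="dummy-token")
print(req.full_url)  # https://api.github.com/repos/octocat/blog/commits/abc123
```

The JSON this endpoint returns includes a files list, which is what PyGithub exposes as commit.files.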

Preparing the Action Folder

Let’s start by creating a new Python project with Poetry, a dependency manager for Python similar to Node.js’s npm:

```bash
poetry new blog-manager-action
```

This creates a folder named blog-manager-action. Inside it is a folder named blog_manager_action, which is where the Python code will live.

Now we can install all the required dependencies:

```bash
poetry add pygithub requests python-dotenv python-frontmatter
```

Building the Action

Our action needs each platform's integration tokens and API keys as inputs.

Now we can begin defining the action's metadata file, action.yml:

```yaml
name: "Blog Manager Action"
description: "A GitHub Action that uploads articles to your blogging platform"

inputs:
  medium_integration_token:
    description: "Medium's Integration Token. Token can be retrieved at medium.com/me/settings/security, under 'Integration tokens'"
    required: true
  hashnode_integration_token:
    description: "Hashnode's Integration Token. Token can be retrieved at hashnode.com/settings/developer"
    required: true
  hashnode_hostname:
    description: "Hostname for Hashnode blog. e.g. cs310.hashnode.dev"
    required: true
  hashnode_publication_id:
    description: "Publication ID for Hashnode blog. Appears in your Blog Dashboard page URL"
    required: true
  github_token:
    description: "A GitHub PAT"
    required: true
  imgbb_api_key:
    description: "API Key for Imgbb CDN"
    required: true

outputs:
  hashnode_url:
    description: "URL of the Hashnode Post"
    value: ${{ steps.run-script.outputs.hashnode_url }}
  medium_url:
    description: "URL of the Medium Post"
    value: ${{ steps.run-script.outputs.medium_url }}

runs:
  using: "composite"
  steps:
    ...
```

Preventing Catastrophe

But before we go into the specific steps, I need to address an issue.

The action is meant to run whenever the repository is pushed to. But the system must also record that a post has been published by updating its frontmatter, and that update is itself another push to the remote.

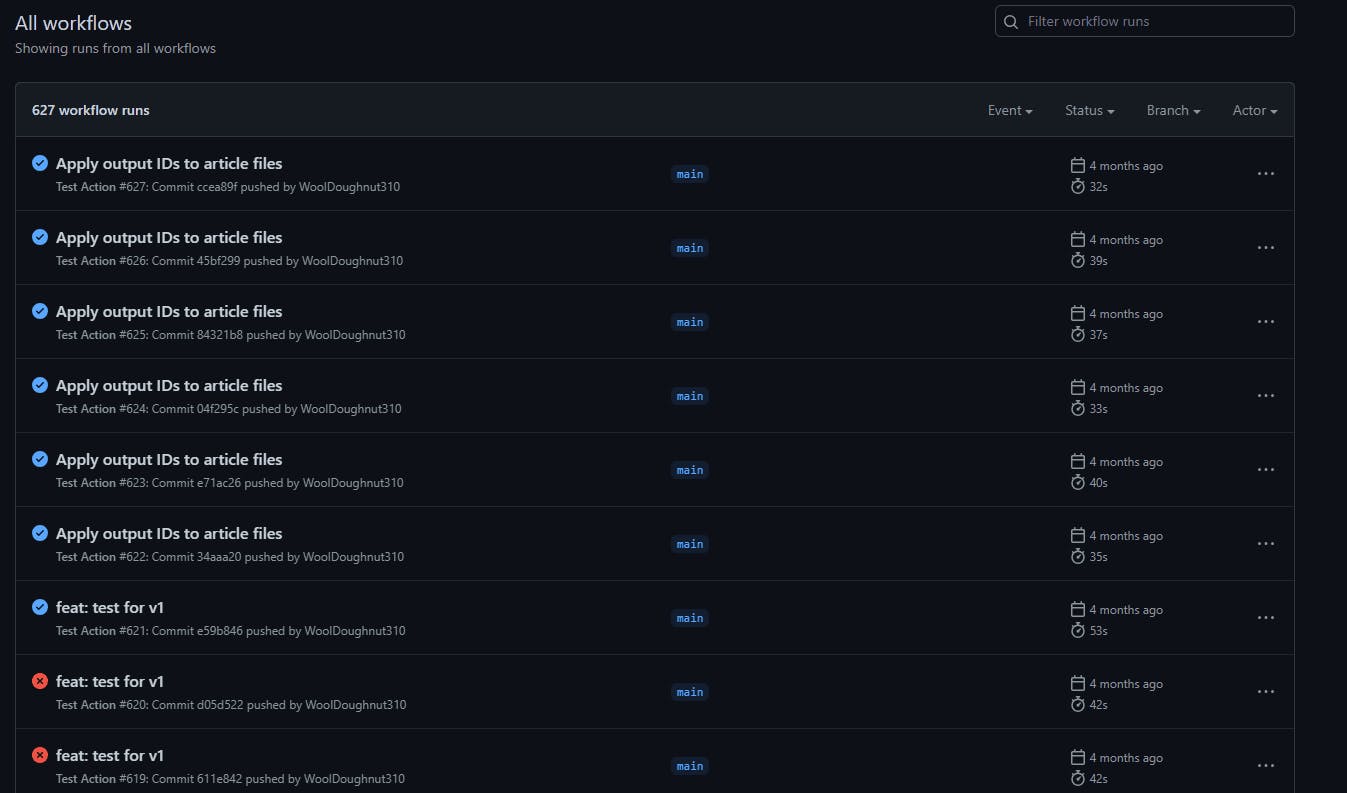

So, to prevent endless triggering of the action, we can stop the action from running if we know it was the action itself that made the push.

And how do we do that? We skip the Python script whenever the message of the commit that triggered the push is CI_COMMIT_MESSAGE, the message the action uses for its own commits:

```yaml
- name: Configures Auto-Commit message in environment
  run: echo "CI_COMMIT_MESSAGE=Apply output IDs to article files" >> $GITHUB_ENV
  shell: bash

- name: Set environment variable "is-auto-commit"
  if: github.event.commits[0].message == env.CI_COMMIT_MESSAGE
  run: echo "is-auto-commit=true" >> $GITHUB_ENV
  shell: bash

- name: Display Github event variable "github.event.commits[0].message"
  run: echo "last commit message = ${{ github.event.commits[0].message }}"
  shell: bash

- name: Display environment variable "is-auto-commit"
  run: echo "is-auto-commit=${{ env.is-auto-commit }}"
  shell: bash
```
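If you'd rather keep this guard in Python, the same check can read the push payload that GitHub writes to the file named by GITHUB_EVENT_PATH. This is a sketch, not part of the action: load_event and is_auto_commit are hypothetical helpers. It compares head_commit, which always refers to the commit the push ended on, whereas commits[0] is just one entry of the pushed commits list.

```python
import json
import os

CI_COMMIT_MESSAGE = "Apply output IDs to article files"


def load_event():
    # GitHub Actions writes the triggering event's JSON payload to this path
    with open(os.environ["GITHUB_EVENT_PATH"]) as f:
        return json.load(f)


def is_auto_commit(event, ci_message=CI_COMMIT_MESSAGE):
    """True when the push ended on a commit the action itself created."""
    # head_commit can be null (e.g. when a branch is deleted), hence the fallback
    head_commit = event.get("head_commit") or {}
    return head_commit.get("message") == ci_message


# Trimmed example payloads containing only the field we read
print(is_auto_commit({"head_commit": {"message": CI_COMMIT_MESSAGE}}))  # True
print(is_auto_commit({"head_commit": {"message": "Add new post"}}))  # False
```

main.py could then exit early with a line such as: if is_auto_commit(load_event()): raise SystemExit(0).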

Now we can install Python, Poetry, and the dependencies, and set up the Python project:

```yaml
- name: Install Python
  id: setup-python
  uses: actions/setup-python@v4
  with:
    python-version: "3.9"

- name: Install Poetry
  uses: snok/install-poetry@v1.3.3
  with:
    virtualenvs-create: true
    virtualenvs-in-project: true
    installer-parallel: true

- name: Load cached venv
  id: cached-poetry-dependencies
  uses: actions/cache@v3
  with:
    # Composite steps run in the caller's workspace, so point at the action's own folder
    path: ${{ github.action_path }}/.venv
    key: venv-${{ runner.os }}-${{ steps.setup-python.outputs.python-version }}-${{ hashFiles('**/poetry.lock') }}

- name: Install dependencies
  if: steps.cached-poetry-dependencies.outputs.cache-hit != 'true'
  run: poetry install --no-interaction --no-root
  working-directory: ${{ github.action_path }}
  shell: bash

- name: Install project
  run: poetry install --no-interaction
  working-directory: ${{ github.action_path }}
  shell: bash
```

Another drawback of using Python for action scripting is that a composite action's inputs aren't passed to the script automatically, so we export them as environment variables for the following steps:

```yaml
- name: Pass Inputs to Shell
  run: |
    echo "MEDIUM_INTEGRATION_TOKEN=${{ inputs.medium_integration_token }}" >> $GITHUB_ENV
    echo "HASHNODE_INTEGRATION_TOKEN=${{ inputs.hashnode_integration_token }}" >> $GITHUB_ENV
    echo "HASHNODE_HOSTNAME=${{ inputs.hashnode_hostname }}" >> $GITHUB_ENV
    echo "HASHNODE_PUBLICATION_ID=${{ inputs.hashnode_publication_id }}" >> $GITHUB_ENV
    echo "IMGBB_API_KEY=${{ inputs.imgbb_api_key }}" >> $GITHUB_ENV
    echo "GITHUB_TOKEN=${{ inputs.github_token }}" >> $GITHUB_ENV
    echo "GITHUB_REPOSITORY=${{ github.repository }}" >> $GITHUB_ENV
    echo "GITHUB_SHA=${{ github.sha }}" >> $GITHUB_ENV
  shell: bash
```
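Because the script reads these values with os.environ.get, a missing secret would only surface later as a confusing None. A fail-fast check at startup is one option; require_env below is a hypothetical helper (not part of the action), and the names it checks are the ones exported by the step above.

```python
import os

REQUIRED_VARIABLES = (
    "MEDIUM_INTEGRATION_TOKEN",
    "HASHNODE_INTEGRATION_TOKEN",
    "HASHNODE_HOSTNAME",
    "HASHNODE_PUBLICATION_ID",
    "IMGBB_API_KEY",
    "GITHUB_TOKEN",
    "GITHUB_REPOSITORY",
    "GITHUB_SHA",
)


def require_env(names=REQUIRED_VARIABLES, environ=os.environ):
    """Return the named variables, exiting with one clear message if any are unset or empty."""
    missing = [name for name in names if not environ.get(name)]
    if missing:
        raise SystemExit(f"Missing required environment variables: {', '.join(missing)}")
    return {name: environ[name] for name in names}


# Example with a plain dict standing in for os.environ
print(require_env(["IMGBB_API_KEY"], {"IMGBB_API_KEY": "secret"}))  # {'IMGBB_API_KEY': 'secret'}
```

Calling require_env() at the top of main.py would stop the run before any platform is contacted.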

Finally, we can conditionally run the script.

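```yaml
- name: run-script
  # The id lets the action's outputs reference steps.run-script.outputs.*
  id: run-script
  if: env.is-auto-commit != 'true'
  run: |
    source .venv/bin/activate
    python blog_manager_action/main.py
  working-directory: ${{ github.action_path }}
  shell: bash
```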

Python Scripting

Here’s the high-level overview of the Python code, in main.py:

```python
from connections import get_last_commit
from extract_article_folders import extract_article_folders
from publish_article import publish_article

commit = get_last_commit()

# Find the root folder of each committed file,
# i.e. the one containing an "article.md"
folders = extract_article_folders(commit.files)

for folder in folders:
    publish_article(folder)
```

Connections

connections.py holds the shared objects for accessing the GitHub repo, each created on first use and then reused:

```python
import os

from github import Auth, Github

_auth = _gh = _repo = _last_commit = None


def get_auth():
    global _auth
    if not _auth:
        _auth = Auth.Token(os.environ.get("GITHUB_TOKEN"))
    return _auth


def get_github():
    global _gh
    if not _gh:
        _gh = Github(auth=get_auth())
    return _gh


def get_repo():
    global _repo
    if not _repo:
        _repo = get_github().get_repo(os.environ.get("GITHUB_REPOSITORY"))
    return _repo


def get_last_commit():
    global _last_commit
    if not _last_commit:
        _last_commit = get_repo().get_commit(sha=os.environ.get("GITHUB_SHA"))
    return _last_commit
```
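The module-level globals implement a simple lazy singleton: each object is built on first use and reused afterwards. On Python 3.9+ (the version the action installs), functools.cache can give the same behaviour with less bookkeeping. In this sketch FakeClient is a hypothetical stand-in for github.Github, so it runs without PyGithub or a token.

```python
from functools import cache

# Counts how many clients get built, purely to demonstrate the caching
creations = 0


class FakeClient:
    """Hypothetical stand-in for github.Github."""

    def __init__(self, token):
        global creations
        creations += 1
        self.token = token


@cache
def get_github(token="dummy-token"):
    # Built on the first call; later calls with the same arguments return the cached instance
    return FakeClient(token)


first = get_github()
second = get_github()
print(first is second, creations)  # True 1
```

With PyGithub, the cached function's body would be return Github(auth=Auth.Token(os.environ["GITHUB_TOKEN"])).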

Extracting the Folder Containing an Article

Here we take each changed file and walk up its directory tree until we find a folder containing an article.md to publish:

```python
from github import UnknownObjectException

from connections import get_repo


def extract_article_folders(files):
    repo = get_repo()
    folders = []

    for file in files:
        parts = file.filename.split("/")

        # Walk up from the file's own folder towards the repo root
        for i in range(len(parts) - 2, -1, -1):
            # Reconstruct the folder path
            folder = "/".join(parts[: i + 1])

            try:
                dir_contents = repo.get_contents(folder)
            except UnknownObjectException:
                # The folder no longer exists (e.g. it was deleted in this commit)
                continue

            # If `article.md` exists within the directory
            if any(item.name == "article.md" for item in dir_contents):
                # Several changed files can belong to one article; publish it once
                if folder not in folders:
                    folders.append(folder)
                break

    return folders
```
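The bubbling-up logic can also be checked offline. In this sketch, the hypothetical find_article_folders takes a set of every file path in the repo in place of the repo.get_contents() calls, and uses PurePosixPath.parents to walk from the nearest folder up to the root. The file paths are made-up examples.

```python
from pathlib import PurePosixPath


def find_article_folders(changed_files, repo_files):
    """Return each distinct folder holding an article.md above a changed file.

    Hypothetical offline variant: `repo_files` is a set of every file path in
    the repository, standing in for the repo.get_contents() lookups.
    """
    folders = []
    for path in changed_files:
        # .parents yields the nearest folder first and ends with "." (the repo root)
        for parent in PurePosixPath(path).parents:
            folder = str(parent)
            if folder == ".":
                break
            if f"{folder}/article.md" in repo_files:
                if folder not in folders:
                    folders.append(folder)
                break
    return folders


repo_files = {
    "README.md",
    "posts/hello-world/article.md",
    "posts/hello-world/cover.png",
    "posts/hello-world/images/diagram.png",
}
changed = ["posts/hello-world/images/diagram.png", "posts/hello-world/article.md", "README.md"]
print(find_article_folders(changed, repo_files))  # ['posts/hello-world']
```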

Publishing An Article

We begin publish_article.py with its imports and constants:

```python
import mimetypes
import os
import re

import frontmatter
import requests

from connections import get_repo
from hashnode import publish_hashnode
from medium import publish_medium

COVER_IMAGE_NAME = "cover.png"
```

Image Uploading

First, define get_image_links, which uploads every image in the post folder to ImgBB and maps each file name to its uploaded URL:

```python
def get_image_links(files):
    # Mapping of image file names to uploaded URLs
    urls = {}

    for file in files:
        # Filter out non-image filetypes
        file_type = mimetypes.guess_type(file.name)[0]
        if file_type is None or not file_type.startswith("image/"):
            continue

        # Upload the file content (PyGithub returns it base64-encoded) to the CDN
        res = requests.post(
            "https://api.imgbb.com/1/upload",
            {
                "key": os.environ.get("IMGBB_API_KEY"),
                "image": file.content,
            },
        )
        # Raise an error if the upload failed (4xx/5xx status)
        res.raise_for_status()

        urls[file.name] = res.json()["data"]["url"]

    return urls
```
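get_image_links sends file.content, which PyGithub returns already base64-encoded. If you start from raw bytes instead (say, file.decoded_content or a local file), you would encode them yourself. This sketch builds the same form fields offline; imgbb_form is a hypothetical helper, and the actual request is left as a comment.

```python
import base64
import mimetypes

IMGBB_UPLOAD_URL = "https://api.imgbb.com/1/upload"


def imgbb_form(api_key, filename, raw_bytes):
    """Form fields for an ImgBB upload, or None if the file isn't an image.

    Hypothetical helper mirroring the filter and payload in get_image_links.
    """
    mime_type, _ = mimetypes.guess_type(filename)
    if mime_type is None or not mime_type.startswith("image/"):
        return None
    return {
        "key": api_key,
        # ImgBB accepts base64-encoded image data in the "image" field
        "image": base64.b64encode(raw_bytes).decode("ascii"),
    }


# The first bytes of a PNG header, as example data
form = imgbb_form("my-api-key", "cover.png", b"\x89PNG\r\n")
print(form["image"])  # iVBORw0K
# Sending it: requests.post(IMGBB_UPLOAD_URL, data=form).json()["data"]["url"]
```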

Including Uploaded Images

The function replace_image_links searches the article's markdown for image links pointing at the uploaded files and replaces each path with its ImgBB URL:

```python
def replace_image_links(markdown, images):
    # Nothing was uploaded, so there is nothing to replace
    if not images:
        return markdown

    new_content = markdown
    image_names = [re.escape(name) for name in images.keys()]

    # Matches ![alt](name) or ![alt](name "title"), capturing the file name in group 1
    MARKDOWN_IMAGE = re.compile(
        rf'!\[[^\]]*\]\(({"|".join(image_names)})\s*((?:\w+=)?"(?:.*[^"])")?\s*\)'
    )

    re_match = MARKDOWN_IMAGE.search(new_content)
    while re_match is not None:
        # Replace the file name with its uploaded URL
        new_content = (
            new_content[: re_match.start(1)]
            + images[re_match[1]]
            + new_content[re_match.end(1) :]
        )
        re_match = MARKDOWN_IMAGE.search(new_content)

    return new_content
```
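The search-and-splice loop can also be written with re.sub and a replacement function, which rewrites every match in one pass. This variant (replace_image_links_sub, a hypothetical name) is a sketch rather than the action's code: its title handling is simplified to an optional double-quoted title, and the ImgBB URL in the example is made up.

```python
import re


def replace_image_links_sub(markdown, images):
    """Swap local image paths in ![alt](path "title") links for uploaded URLs."""
    if not images:
        return markdown
    names = "|".join(re.escape(name) for name in images)
    # Groups: 1 = "![alt](", 2 = file name, 3 = optional title plus ")"
    pattern = re.compile(rf'(!\[[^\]]*\]\()({names})(\s*(?:"[^"]*")?\s*\))')
    return pattern.sub(lambda m: m[1] + images[m[2]] + m[3], markdown)


markdown = '![Flow](image-1.png "Data flow")\n![Logo](logo.svg)'
# Example URL only; real ones come from ImgBB's upload response
images = {"image-1.png": "https://i.ibb.co/abc123/image-1.png"}
print(replace_image_links_sub(markdown, images))
```

Only the file name is swapped, so alt text and titles survive, and links to files that weren't uploaded (logo.svg here) are left alone.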

Wrapping It All Up

publish_article ties everything together.

We publish the formatted markdown content to each platform, then add the published URLs to the action’s outputs.

Finally, we mark the article as published in its frontmatter and commit the change with CI_COMMIT_MESSAGE, so the resulting push doesn't trigger another run:

```python
def publish_article(folder):
    repo = get_repo()
    contents = repo.get_contents(folder)
    article_file = next(file for file in contents if file.name == "article.md")
    article = article_file.decoded_content.decode()

    # Upload all the images within the folder to the CDN
    images = get_image_links(contents)
    # Replace all image references with their uploaded CDN URLs
    article = replace_image_links(article, images)
    # Parse the frontmatter into `.metadata` and the body into `.content`
    article = frontmatter.loads(article)

    # Publish to blogging platforms
    cover_image_url = images.get(COVER_IMAGE_NAME)

    hashnode_url = publish_hashnode(article, cover_image_url)
    print(f"Published to Hashnode at {hashnode_url}")

    medium_url = publish_medium(article, cover_image_url)
    print(f"Published to Medium at {medium_url}")

    # Expose the URLs as step outputs (replaces the deprecated ::set-output command)
    with open(os.environ["GITHUB_OUTPUT"], "a") as output:
        output.write(f"hashnode_url={hashnode_url}\n")
        output.write(f"medium_url={medium_url}\n")

    # Mark the article as published and commit it with CI_COMMIT_MESSAGE.
    # update_file commits straight to the default branch through the
    # Contents API, so no separate push is needed.
    article["is_published"] = True
    repo.update_file(
        f"{folder}/article.md",
        os.environ.get("CI_COMMIT_MESSAGE"),
        frontmatter.dumps(article),
        article_file.sha,
    )
```

Using Hashnode

In hashnode.py, we use Hashnode's GraphQL API to publish the markdown content:

```python
import os

import requests


def publish_hashnode(article, cover_image_url=None):
    query = """mutation CreateStory($input: CreateStoryInput!) {
        createStory(input: $input) {
            code
            success
            message
        }
    }"""

    # Send an update instead if the post has already been published
    if article.get("is_published") and "hashnode_id" in article.keys():
        query = query.replace(
            "createStory(", f'updateStory(postId: "{article["hashnode_id"]}", '
        )

    variables = {
        "input": {
            "title": article["title"],
            "slug": article.get("slug"),
            "contentMarkdown": article.content,
            "tags": article.get("hashnode_tags", []),
            "isPartOfPublication": {
                "publicationId": os.environ.get("HASHNODE_PUBLICATION_ID")
            },
        }
    }

    # Accept a cover image, if available
    if cover_image_url:
        variables["input"]["coverImageURL"] = cover_image_url

    if "canonical_url" in article.keys():
        variables["input"]["isRepublished"] = {
            "originalArticleURL": article["canonical_url"]
        }

    res = requests.post(
        "https://api.hashnode.com",
        json={"query": query, "variables": variables},
        headers={"Authorization": os.environ.get("HASHNODE_INTEGRATION_TOKEN")},
    )

    data = res.json()
    if data.get("errors"):
        raise SystemExit(", ".join(e["message"] for e in data["errors"]))

    return f'https://{os.environ.get("HASHNODE_HOSTNAME")}'
```

Using Medium

Start by importing the necessary dependencies at the top of medium.py:

```python
import os
from re import compile
from urllib.parse import urlparse

import requests
from github import InputFileContent

from connections import get_github
```

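Medium publishing is slightly different. In my experience, Medium doesn't import code blocks nicely, so we host them as GitHub Gists instead. Only fenced code blocks whose opening fence ends in #<filename> (such as python#main.py) are converted: each becomes a public gist with that file name, and the gist's URL replaces the block.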

```python
def gistify_code_blocks(markdown):
    gh = get_github()
    user = gh.get_user()
    new_content = markdown

    # Fenced blocks whose info string ends in "#<filename>", e.g. python#main.py
    MARKDOWN_CODE_BLOCK = compile(r"```(?:.+)?#(.+)\n((?:.|\n)+?)\n+```")

    re_match = MARKDOWN_CODE_BLOCK.search(new_content)
    while re_match is not None:
        # Create a public gist with the code block content and filename
        gist = user.create_gist(True, {re_match[1]: InputFileContent(re_match[2])})

        # Replace the code block in the markdown with the gist URL
        new_content = (
            new_content[: re_match.start()]
            + gist.html_url
            + new_content[re_match.end() :]
        )
        re_match = MARKDOWN_CODE_BLOCK.search(new_content)

    return new_content
```
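To see which blocks qualify, the same pattern can be run over a sample string offline; no gists are created here. The sample markdown is a made-up example.

```python
import re

# Same pattern as in gistify_code_blocks
MARKDOWN_CODE_BLOCK = re.compile(r"```(?:.+)?#(.+)\n((?:.|\n)+?)\n+```")

sample = (
    "Intro\n\n"
    "```python#hello.py\nx = 1\n```\n\n"  # has a #filename: would become a gist
    "```python\ny = 2\n```\n"  # no #filename: stays in the markdown
)
print(MARKDOWN_CODE_BLOCK.findall(sample))  # [('hello.py', 'x = 1')]
```

Each tuple is (gist file name, gist content), exactly what gistify_code_blocks passes to create_gist.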

Also, cross-posted Medium articles usually end with an “Originally published at …” note, which links to the canonical URL (the original address).

Define a function create_canonical_reference that returns this note in markdown:

```python
def create_canonical_reference(url):
    if url is None:
        return ""

    parsed_url = urlparse(url)
    base_url = f"{parsed_url.scheme}://{parsed_url.hostname}"

    # e.g. *Originally published at [https://cs310.hashnode.dev](https://cs310.hashnode.dev/post-4).*
    return f"\n\n---\n\n*Originally published at [{base_url}]({url}).*"
```

Publishing

Now we can publish. Unlike Hashnode, the Medium API requires the title to be part of the markdown content, so we prepend it, but only when the post is being published for the first time:

```python
def get_user_id():
    # Medium's GET /v1/me endpoint returns the authenticated user's details, including their id
    res = requests.get(
        "https://api.medium.com/v1/me",
        headers={
            "Authorization": f"Bearer {os.environ.get('MEDIUM_INTEGRATION_TOKEN')}"
        },
    )
    res.raise_for_status()
    return res.json()["data"]["id"]


def publish_medium(article, cover_image_url=None):
    user_id = get_user_id()

    # Enrich the markdown content with title, cover image & canonical reference
    def transform_content(article):
        content = gistify_code_blocks(article.content)
        if not article.get("is_published"):
            content = f"# {article['title']}\n" + content
        if cover_image_url:
            content = f"![cover]({cover_image_url})\n" + content
        if "canonical_url" in article.keys():
            content += create_canonical_reference(article["canonical_url"])
        return content

    res = requests.post(
        f"https://api.medium.com/v1/users/{user_id}/posts",
        headers={
            "Authorization": f"Bearer {os.environ.get('MEDIUM_INTEGRATION_TOKEN')}"
        },
        json={
            "title": article["title"],
            "contentFormat": "markdown",
            "content": transform_content(article),
            "tags": article.get("medium_tags", []),
            "canonicalUrl": article.get("canonical_url"),
            "publishStatus": "public",
        },
    )

    data = res.json()
    if data.get("errors"):
        raise SystemExit(data["errors"][0]["message"])

    return data["data"]["url"]
```

Sample Workflow

Here is a sample workflow that would use the action.

```yaml
name: Test Action

on: push

jobs:
  run-script:
    runs-on: ubuntu-latest
    name: Returns the published URL
    steps:
      - name: Checkout
        uses: actions/checkout@v3

      - name: Run publishing script
        id: execute
        uses: WoolDoughnut310/blog-manager-action@main
        with:
          medium_integration_token: ${{ secrets.medium_integration_token }}
          hashnode_integration_token: ${{ secrets.hashnode_integration_token }}
          hashnode_hostname: ${{ secrets.hashnode_hostname }}
          hashnode_publication_id: ${{ secrets.hashnode_publication_id }}
          github_token: ${{ secrets.token }}
          imgbb_api_key: ${{ secrets.imgbb_api_key }}
```

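Note that each credential must be added as a secret in the GitHub repo's settings. The github_token secret should be a personal access token with read and write access to the repository's contents, so the updated frontmatter can be pushed, and with permission to create gists, since gistify_code_blocks creates them on the token owner's account.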

Bonus: Submodules

Another useful feature would be for users to include their code repositories within this blog repository, so that the blog repository becomes something of a mega repository.

Well, this isn’t really a feature of our system, but one built into Git.

Let's say the user has a code repo at REPO_URL, and they feature snippets of it in a blog post.

They can include that repo in their blog repository, under the folder name project, with the following command:

```bash
git submodule add REPO_URL project
```

Conclusion

And that’s all for today’s project. I hope you’ve seen the potential GitHub Actions has for building a comprehensive blog management system.

Perhaps it will be useful for those wanting to start their blogging journey.

If you liked this post, comment on your own experiences and stick around for more!

You can find the code for this article on GitHub.

References

GitHub Actions documentation (GitHub Docs)

Most effective ways to push within GitHub Actions (Johtizen)

Writing Your First Python GitHub Action (Shipyard)

Git - Submodules (git-scm.com)

Medium/medium-api-docs: Documentation for Medium's OAuth2 API (github.com)

PyGithub 2.1.0 documentation (PyGithub)

What exactly is Frontmatter? (daily-dev-tips.com)

Author’s Note

I did get the infinite recursion of repo pushes and workflow runs!

One drawback of using Medium is that users can’t edit their articles through the API. Plus, the API itself has been deprecated for some time now.