Identify Music With Your AI-Powered Assistant

Use ACRCloud to record and identify audio with Node.js and JavaScript

Hello and welcome!

In the last article, we used Howler.js to control audio playback. Today, we'll use the ACRCloud API to identify music, much like SoundHound does.

As a result, we'll be able to ask "What's this song?" and hear back the song's title and artist.

The video below shows this in action:

Setting up

If you missed the last article, read it to understand how we add new command handlers.

If you want to skip ahead, I'd still recommend at least reading the first part of the series.

You can find the starter code for this article here.

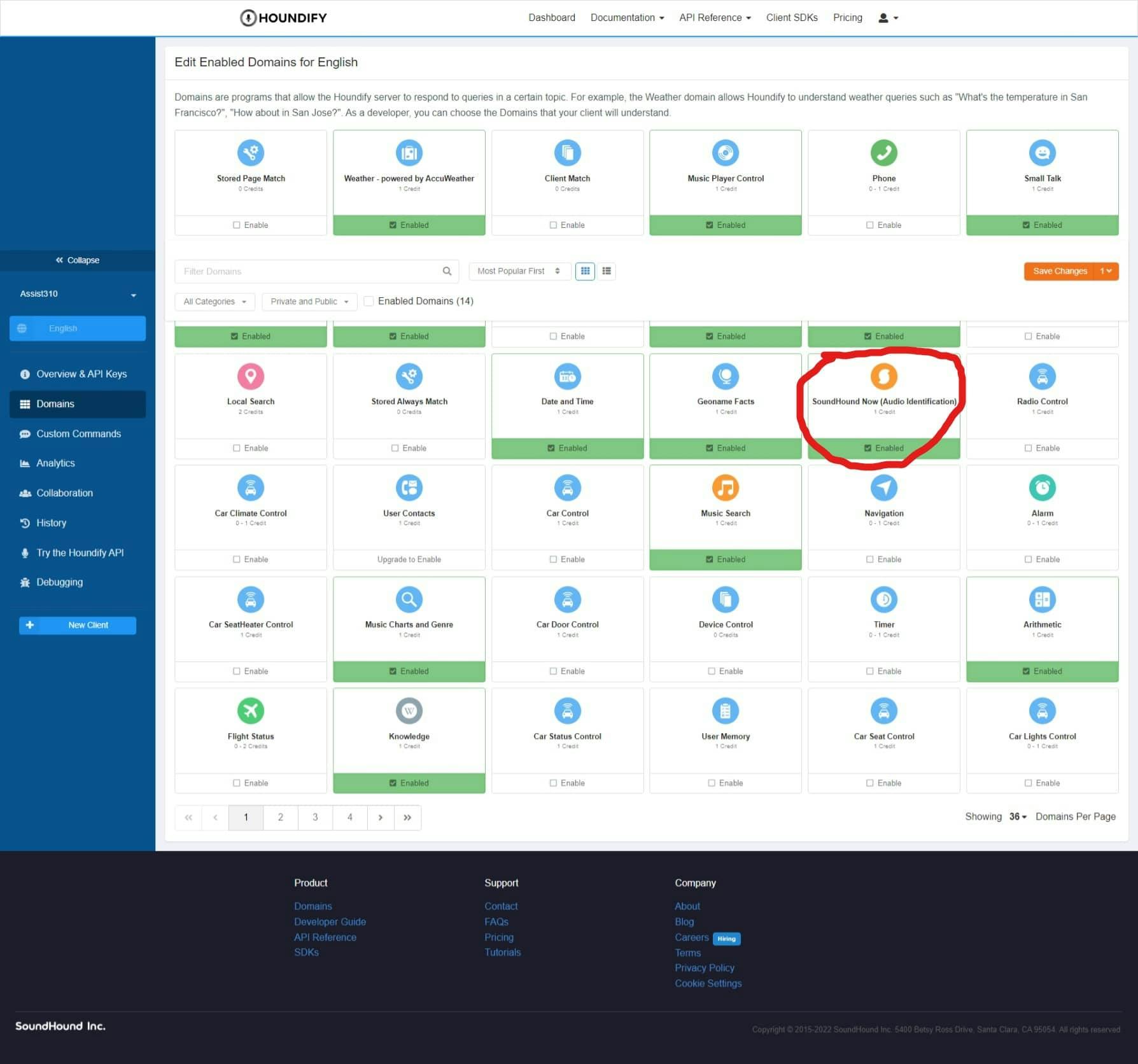

First, enable the SoundHoundNow domain in the Houndify dashboard and click Save Changes in the top right. This domain provides the commands for music identification.

Handling the Music Identification Command

Create a new handler file named SoundHoundNowCommand.ts, as in the last article.

Add the following code to the top of the file:

```typescript
import axios from "axios";
import { toast } from "react-toastify";
import say from "../lib/say";

const SUCCESS_RESULT = "SingleTrackWithArtistResult";
const FAILED_RESULT = "NoMatchResult";
const STARTING_RESULT = "StartSoundHoundSearchResult";

const LISTEN_DURATION = 15000;

const sleep = (duration: number) =>
  new Promise((resolve) => setTimeout(resolve, duration));
```

STARTING_RESULT is the result whose spoken response is "Listening...". We pass that response to text-to-speech just before we start recording.

Next, define a function named handleACRCommand. It announces that it's listening, records audio, sends the recording to the server for identification and returns the matching result.

```typescript
const handleACRCommand = async (result: any) => {
  try {
    say(result[STARTING_RESULT].SpokenResponseLong);
    // Wait for speech to finish before recording
    await sleep(2000);

    const toastID = toast("Listening...", {
      type: "info",
      autoClose: LISTEN_DURATION,
    });
    const audioBlob = await recordAudio(LISTEN_DURATION);
    toast.dismiss(toastID);

    const body = new FormData();
    body.append("file", audioBlob);
    const response = await axios.post(`/acr-identify`, body, {
      timeout: 30000,
    });

    const data = response.data;
    let SpokenResponseLong = result[SUCCESS_RESULT].SpokenResponseLong;
    SpokenResponseLong = SpokenResponseLong.replace(
      "%result_title%",
      data.title
    ).replace("%result_artist%", data.artist);

    return { ...result[SUCCESS_RESULT], SpokenResponseLong };
  } catch (e) {
    return result[FAILED_RESULT];
  }
};
```

Let's break this down.

```typescript
const handleACRCommand = async (result: any) => {
  try {
    say(result[STARTING_RESULT].SpokenResponseLong);
    // Wait for speech to finish before recording
    await sleep(2000);
```

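First, we speak the starting response and wait two seconds so that the speech can finish before recording starts. Note that this requires moving the say function out of App.tsx into its own module (imported above as ../lib/say) so that handlers can use it.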
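The fixed two-second delay only approximates how long the speech takes. If your say function uses the browser's Web Speech API (an assumption; the last article's implementation may differ), you could instead wait for the utterance's end event. Here is a minimal sketch; sayAndWait is a hypothetical name:

```typescript
// Hypothetical variant of `say` that resolves once speech has finished.
// Assumes the browser's Web Speech API (window.speechSynthesis).
const sayAndWait = (text: string): Promise<void> =>
  new Promise((resolve, reject) => {
    const utterance = new SpeechSynthesisUtterance(text);
    // Fires when the utterance has finished being spoken.
    utterance.onend = () => resolve();
    // Fires if the speech engine fails to speak the utterance.
    utterance.onerror = (event) => reject(new Error(event.error));
    window.speechSynthesis.speak(utterance);
  });
```

With this in place, `await sayAndWait(result[STARTING_RESULT].SpokenResponseLong);` could replace both the say call and `sleep(2000)`, so recording starts as soon as the speech ends rather than after a guessed delay.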

```typescript
    // ...
    const toastID = toast("Listening...", {
      type: "info",
      autoClose: LISTEN_DURATION,
    });
    const audioBlob = await recordAudio(LISTEN_DURATION);
    toast.dismiss(toastID);
```

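Then, we display a toast to let the user know we're recording, record audio for LISTEN_DURATION (15 seconds) with a recordAudio helper, and dismiss the toast once recording finishes.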
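The recordAudio helper isn't shown in this article. Below is a minimal sketch using the browser's MediaRecorder API; it is an assumption about how it could be written, not necessarily the author's version. It needs microphone permission and a browser that supports MediaRecorder, and it resolves to the Blob that handleACRCommand appends to the FormData.

```typescript
// Sketch of a recordAudio helper (assumed implementation).
// Records from the microphone for `duration` ms and resolves to a Blob.
const recordAudio = async (duration: number): Promise<Blob> => {
  // Ask the user for microphone access.
  const stream = await navigator.mediaDevices.getUserMedia({ audio: true });
  const recorder = new MediaRecorder(stream);
  const chunks: Blob[] = [];

  // Collect encoded audio chunks as the recorder emits them.
  recorder.ondataavailable = (event) => chunks.push(event.data);

  // Resolves once the recorder has flushed its final chunk and stopped.
  const stopped = new Promise<void>((resolve) => {
    recorder.onstop = () => resolve();
  });

  recorder.start();
  // Record for the requested duration, then stop.
  await new Promise((resolve) => setTimeout(resolve, duration));
  recorder.stop();
  await stopped;

  // Release the microphone so the browser's recording indicator turns off.
  stream.getTracks().forEach((track) => track.stop());

  // Combine the chunks, keeping the recorder's container format.
  return new Blob(chunks, { type: recorder.mimeType });
};
```

Stopping the tracks matters: without it, the browser keeps the microphone open after the recording has been sent.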

```typescript
    // ...
    const body = new FormData();
    body.append("file", audioBlob);
    const response = await axios.post(`/acr-identify`, body, {
      timeout: 30000,
    });
```

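We then upload the recorded audio to the server as multipart form data under the field name "file". The server reads the upload from that same field, so the two names must match. The request times out after 30 seconds.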

```typescript
    // ...
    const data = response.data;
    let SpokenResponseLong = result[SUCCESS_RESULT].SpokenResponseLong;
    SpokenResponseLong = SpokenResponseLong.replace(
      "%result_title%",
      data.title
    ).replace("%result_artist%", data.artist);
```

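The server identifies the audio and responds with the track's title and artist. We substitute these into the %result_title% and %result_artist% placeholders of the success result's SpokenResponseLong.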
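Note that `String.prototype.replace` with a string pattern replaces only the first occurrence of each placeholder. If you want a reusable approach that also handles repeated placeholders, a small helper with a global regular expression works. fillTemplate is a hypothetical name, and the template sentence below is made up; the real wording comes from the SoundHoundNow domain.

```typescript
// Hypothetical helper: replaces every %placeholder% token in a response
// template with the matching value. Unknown placeholders are left unchanged.
const fillTemplate = (
  template: string,
  values: Record<string, string>
): string =>
  template.replace(/%([a-z_]+)%/g, (match: string, key: string) =>
    Object.prototype.hasOwnProperty.call(values, key) ? values[key] : match
  );

// Example usage with a made-up template:
const spoken = fillTemplate("This is %result_title% by %result_artist%.", {
  result_title: "Some Song",
  result_artist: "Some Artist",
});
console.log(spoken); // This is Some Song by Some Artist.
```

In handleACRCommand, this would be called with `{ result_title: data.title, result_artist: data.artist }`. Leaving unknown placeholders intact makes a missing value easy to spot rather than silently dropping text.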

```typescript
    // ...
    return { ...result[SUCCESS_RESULT], SpokenResponseLong };
  } catch (e) {
    return result[FAILED_RESULT];
  }
};
```

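Finally, we return the success result with the filled-in spoken response. If any step throws (for example, microphone access is denied, the request times out, or no match is found), we return the NoMatchResult instead.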

And don’t forget to add our new file to the handlers array in index.ts.

```typescript
const COMMANDS = ["MusicCommand", "MusicPlayerCommand", "SoundHoundNowCommand"];
```

Using the ACRCloud API

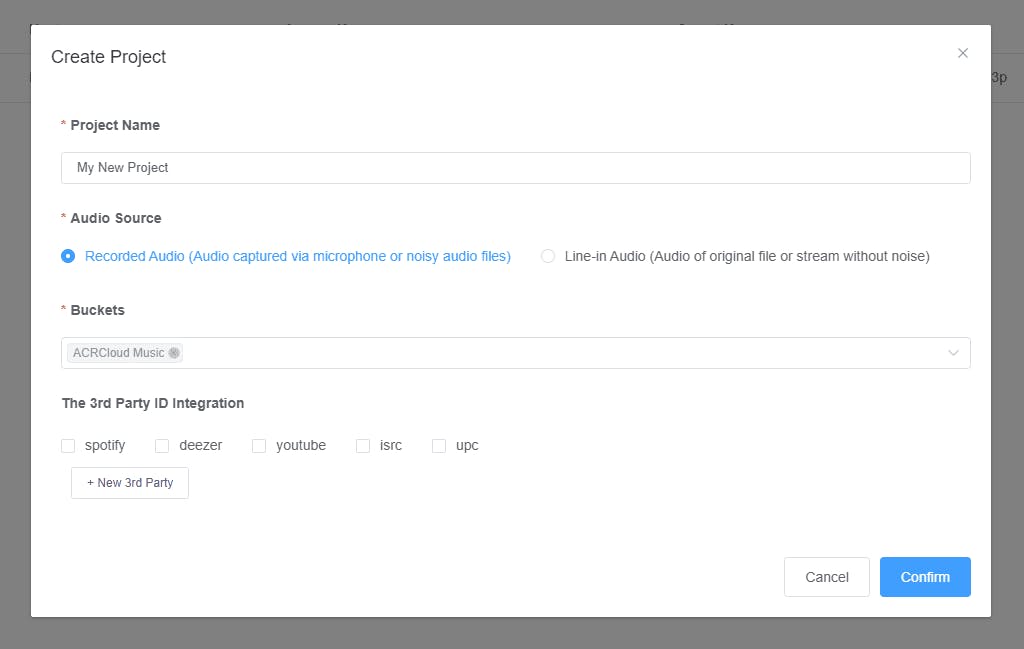

First, we need to get our API credentials. Sign up at acrcloud.com and create an Audio & Video Recognition project.

Now install acrcloud from npm to access the API. We also need formidable to parse the uploaded audio file, plus @types/formidable for its type definitions.

```shell
npm i acrcloud formidable @types/formidable
```

Now add your credentials to the end of the .env file like so:

```
# ...
ACRCLOUD_HOST=identify-eu-west-1.acrcloud.com
ACRCLOUD_ACCESS_KEY=*****
ACRCLOUD_SECRET=*****
```

Initialising the ACRCloud Instance

Open up server.js and import the necessary packages.

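```javascript
// fs/promises is used by identifyACR to read the uploaded file
const fs = require("fs/promises");
const acrcloud = require("acrcloud");
const formidable = require("formidable");
```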

Initialise an ACRCloud instance with your credentials.

```javascript
const acr = new acrcloud({
  host: process.env.ACRCLOUD_HOST,
  access_key: process.env.ACRCLOUD_ACCESS_KEY,
  access_secret: process.env.ACRCLOUD_SECRET,
});
```
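If any of these variables are missing, the client is created with undefined values, and the problem may only show up when the first identification request fails. A small guard can fail fast at startup instead. requireEnv is a hypothetical helper, shown here in TypeScript; drop the type annotations to use it in server.js.

```typescript
// Hypothetical helper: returns the value of a required environment
// variable, or throws if it is missing or empty.
const requireEnv = (name: string): string => {
  const value = process.env[name];
  if (!value) {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
};
```

You could then pass `requireEnv("ACRCLOUD_HOST")`, `requireEnv("ACRCLOUD_ACCESS_KEY")` and `requireEnv("ACRCLOUD_SECRET")` to the acrcloud constructor, so a misconfigured .env file stops the server immediately with a clear message.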

Adding the Music Identification API Route

Define an API route to identify audio files. It parses the file upload, reads the path of the uploaded file, sends the file to ACRCloud and returns the track's title and artist(s) as JSON.

Here is the code for the route.

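```javascript
app.post("/acr-identify", async function (req, res) {
  try {
    // Parse the multipart upload sent by the client.
    const form = formidable();
    const { files } = await new Promise((resolve, reject) =>
      form.parse(req, (err, fields, files) => {
        if (err) {
          reject(err);
          return;
        }
        resolve({ fields, files });
      })
    );

    // formidable v3 returns an array per field; v2 returns a single file.
    const upload = Array.isArray(files.file) ? files.file[0] : files.file;
    if (!upload) {
      res.status(400).json({ error: "No file uploaded" });
      return;
    }

    const metadata = await identifyACR(upload.filepath);
    const title = metadata.title;
    // Join multiple artists into one string, e.g. "Artist A, Artist B".
    const artist = (metadata.artists ?? []).map((a) => a.name).join(", ");

    res.status(200).json({ title, artist });
  } catch (error) {
    // Error objects serialise to {} in JSON, so send the message instead.
    const message = error instanceof Error ? error.message : String(error);
    res.status(500).json({ error: message });
  }
});
```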

Identifying Audio Files

Finally, define identifyACR. It reads the uploaded file from disk, sends it to ACRCloud and returns the metadata of the first matching song. It throws an error if ACRCloud reports a non-zero status code or returns no music matches.

```javascript
const identifyACR = async (filePath) => {
  const fileData = await fs.readFile(filePath);
  const data = await acr.identify(fileData);

  if (data.status.code !== 0) {
    throw new Error(data.status.msg);
  }

  const metadata = data.metadata;
  if (metadata.music?.length > 0) {
    return metadata.music[0];
  }

  throw new Error("No music found");
};
```
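Because identifyACR reads from disk and calls the network, it's awkward to test on its own. One option is to pull the response handling into a pure function. This sketch mirrors the checks in identifyACR and the title/artist formatting from the route; summarizeAcrResponse is a hypothetical name, and the AcrResponse type describes only the fields this article's code reads, not the full ACRCloud response.

```typescript
// Only the fields this article's code uses; the real response has more.
type AcrResponse = {
  status: { code: number; msg: string };
  metadata?: {
    music?: { title: string; artists?: { name: string }[] }[];
  };
};

// Hypothetical helper: turns an ACRCloud identify response into the
// { title, artist } object returned by /acr-identify.
const summarizeAcrResponse = (
  data: AcrResponse
): { title: string; artist: string } => {
  // As in identifyACR, any non-zero status code is treated as a failure.
  if (data.status.code !== 0) {
    throw new Error(data.status.msg);
  }
  // Take the first music match, if there is one.
  const match = data.metadata?.music?.[0];
  if (!match) {
    throw new Error("No music found");
  }
  // Join multiple artists, e.g. "Artist A, Artist B".
  const artist = (match.artists ?? []).map((a) => a.name).join(", ");
  return { title: match.title, artist };
};
```

The route would then call `summarizeAcrResponse(await acr.identify(fileData))`, and the formatting rules can be checked with hand-written response objects instead of real audio.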

Conclusion

That's all for now. You can find all the code for this article here.

Next time, we'll use the Houndify API to create custom commands, which will let us build our own Pokédex using the Pokémon API. Stay tuned!

Resources

https://github.com/node-formidable/formidable

https://medium.com/@bryanjenningz/how-to-record-and-play-audio-in-javascript-faa1b2b3e49b